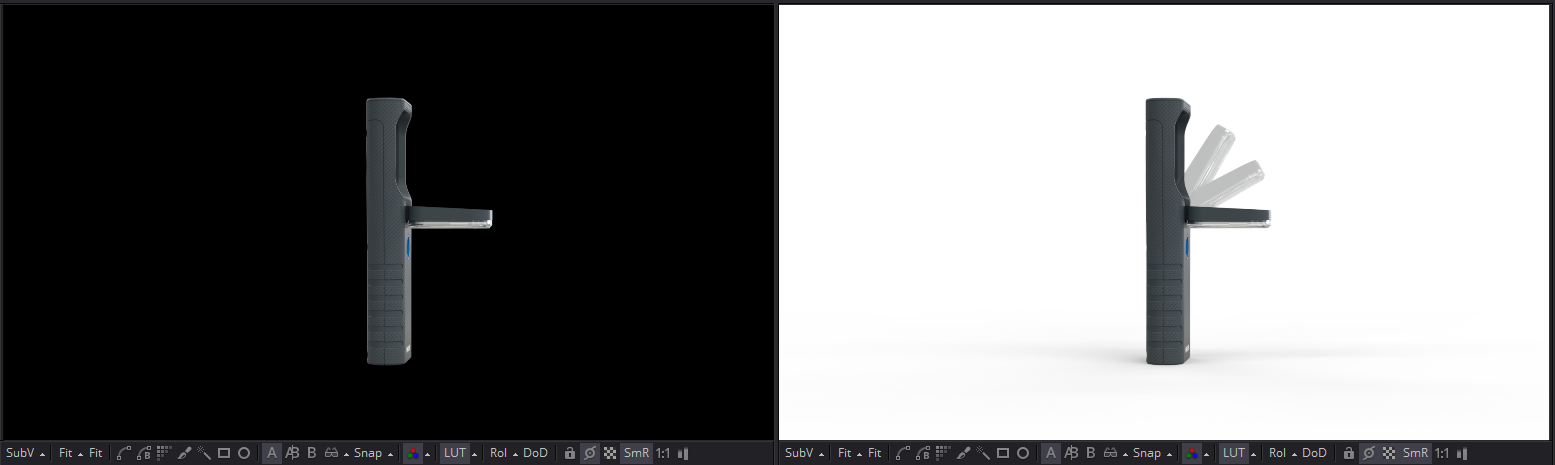

CAD models were provided by the client. As is often the case with the .stp and .igs 3D data exchange formats, certain features may not be recognised and surface trims can cause trouble. Those kinds of issues were ironed out in MoI3D, and unnecessary geometry was removed. Dense .obj meshes were then exported and brought over to Blender for animation. Lighting and rendering were done in Octane Render, and compositing took place in Blackmagic Fusion.

Particle effects, fluids, textures and the soundtrack are royalty-free stock.

Click on the video to the left to pause it before it's driven you crazy, please.

On another note, that particular scene was built to illustrate the IP (shockproof) rating of the lamp. Unlike the rest of the animation, which was keyframed, that scene was simulated using rigid body dynamics. The camera rig was parented to the lamp. The animation was then baked and exported to Alembic.

All Alembic scenes were shaded entirely in Octane Render standalone.

2D and 3D compositing and final delivery were accomplished in Fusion.

First, a 2D back-to-beauty composite with slight colour corrections. Shader nodes (re-lighting nodes that use shading-normal AOVs) were used to enhance the contrast of the secondary surface details, such as the grip ridges and the housing seams, essentially bringing them out a little. Beyond that, the 'relighting' capabilities of those shader nodes are far from ideal.
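
As a rough illustration of why a shading-normal AOV can bring out surface detail, here is a minimal sketch of a Lambert-style contrast boost applied per pixel. It is not how Fusion's Shader node is implemented; the function names, the `gain` parameter and the way the Lambert term is mixed into the beauty pixel are all assumptions made for this example.

```python
import math


def normalize(v):
    """Return v scaled to unit length (or a zero vector if v has no length)."""
    length = math.sqrt(sum(c * c for c in v))
    if length == 0.0:
        return (0.0, 0.0, 0.0)
    return tuple(c / length for c in v)


def relight_pixel(beauty, normal, light_dir, gain=0.5):
    """Hypothetical helper: nudge a beauty pixel up or down by a Lambert term.

    beauty    -- (r, g, b) value from the beauty pass
    normal    -- (x, y, z) value from the shading-normal AOV
    light_dir -- direction towards an imaginary light (assumed, not from the scene)
    gain      -- strength of the effect; 0 leaves the pixel untouched
    """
    n = normalize(normal)
    l = normalize(light_dir)
    # Lambert term: 1 where the surface faces the light, 0 where it faces away.
    lambert = max(0.0, sum(a * b for a, b in zip(n, l)))
    # Centre the term around 0.5 so lit areas brighten and unlit areas darken.
    factor = 1.0 + gain * (lambert - 0.5)
    return tuple(max(0.0, c * factor) for c in beauty)
```

Because small ridges and seams have normals that differ from the surrounding surface, they pick up a different factor than their neighbours, which is what raises their local contrast.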

Then, 3D Alembic scenes (the same files used in Octane) with matching cameras were hooked to OpenGL renderers to output several info-passes, such as motion vectors for motion blur and object ID and material ID for generating mattes.
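
To show what generating a matte from an ID pass amounts to, here is a minimal sketch that turns an object ID image into a binary matte. The nested-list image layout and the `id_matte` name are assumptions for illustration only; in Fusion this is handled by the compositing nodes rather than by hand-written code.

```python
def id_matte(id_pass, target_ids):
    """Hypothetical helper: build a 0/1 matte from an object ID pass.

    id_pass    -- image as a list of rows, each pixel holding an integer object ID
    target_ids -- IDs of the objects that should be white in the matte
    """
    targets = set(target_ids)
    # A pixel is fully on when its ID is one of the targets, otherwise off.
    return [[1.0 if pixel in targets else 0.0 for pixel in row] for row in id_pass]
```

A matte built this way has hard, aliased edges, so in practice it usually needs some softening before it is used to isolate a colour correction.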

Such an involved workflow may seem like overkill for the task. However, given the time frame, there was no possibility for re-renders. Re-renders are time-consuming not just for the obvious reason of having to wait for frames to be processed, but also because of the increased need for managing the content and for replacing and archiving previous sequences. Generating passes directly from the Alembic scenes, inside Fusion, essentially took care of content management right then and there, on the fly.

Also, at the time of making, the product had not been released yet and there was a chance that the final look would be ever so slightly different.